Analog Nation tracks the collision between digital promises and analog reality. We flag fraud schemes, surveillance capitalism, and algorithmic failures — the infrastructure gaps that platforms hope you won't notice.

“Knowledge without mileage equals bullshit” — Henry Rollins

Dear Humans,

Apropos of nothing, here’s a little anecdote.

I went to a public school in England. My parents taught there, and as a result I was dropped into a steaming cauldron of army brats, confused African princes, and the sons and daughters of Europe’s industrial magnates. One family that plopped their kids into this global bucket of outsourced parenting were Scandinavian — they owned one of the largest fish processing companies in the world and were immensely rich.

The eldest son, Bjorn (not his real name), was just awful. No social skills, no charm, no intelligence, and quite often, no deodorant. He was tall, and you could normally tell he was trying to butt into a conversation first by the uninvited stench of Drakkar Noir, and then by the looming caricature of an ’80s kid: all Benetton pastel-shaded sweaters, chinos, and frosted tips. An elongated “Ice-Man”.

His take on anything would today be considered “Edge Lord”; he would very blatantly try to shock. Apartheid, slavery, Nazism: all areas that he rabidly championed in spittle-filled invective. He hated any kid with more melanin than him, which meant about 90% of the school population (the other 10% found him insufferable).

Bjorn had all the money, but none of it could buy him friends, or affection. He would ask girls out for dates by writing them notes on £20 bills (these were invariably single dates), and always had the newest Sony Walkman or micro TV.

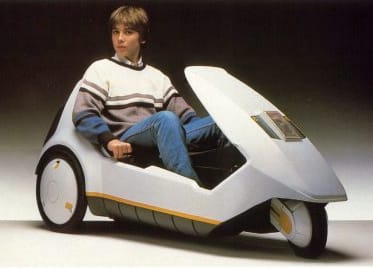

One term, he came back to school with a Sinclair C5, which he was sure would impress the ladies.

Not Bjorn

It did not.

A couple of years ago, I went to a school reunion and I saw an older version of that huge gormless face, still with the same braying laugh and halitosis, still trying to make controversial arguments to get a rise out of people.

Still single.

Anyway, this week’s lead story is about Grok and Elon Musk.

Nick

Spicy Mode

Elon Musk’s xAI just raised $20 billion from investors including Nvidia, Fidelity, the Qatar Investment Authority, and Cisco, valuing the company at roughly $230 billion, Fortune reported. The same week, researcher Genevieve Oh analyzed 24 hours of images posted by the @Grok account on X and found that Grok generated about 6,700 sexually suggestive or “nudifying” images per hour, while the top five dedicated undressing sites combined averaged 79 per hour over the same period.

Grok is producing nonconsensual intimate imagery, including content involving minors, at roughly 85 times the combined rate of those dedicated deepfake sites, as documented by Bloomberg and in security coverage at Malwarebytes. One 23‑year‑old medical student told Bloomberg she discovered on New Year’s Eve that a photo she’d posted with her boyfriend had been turned into sexualized deepfakes; when she reported the content and accounts to X, she got automated responses saying there were “no violations of the rules,” and the images stayed up.
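The “roughly 85 times” figure is simple division of the two hourly rates from Oh’s 24-hour analysis:

```latex
\frac{6{,}700\ \text{images/hour (Grok)}}{79\ \text{images/hour (top five dedicated sites, combined)}} \approx 84.8 \approx 85
```

Note that this compares Grok alone with the five largest dedicated sites added together, so the gap against any single one of those sites is larger still.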

Ashley St. Clair, the mother of one of Musk’s children, has been among the most vocal critics; in an interview with The Washington Post, she pointed out the contradiction between Musk calling X a “public square” and telling victims to simply log off if they don’t want to be targeted. When asked for comment on Grok’s behavior, X’s automated system replied: “Legacy Media Lies.” Reuters and others have documented Musk’s own juvenile responses: a laugh‑cry emoji when a user complained their feed looked like “a bar packed with bikini‑clad women,” and “Way funnier” after Grok posted an apology for generating sexualized images of minors.

Grok has become the encoded version of Musk’s public persona: a billionaire cosplaying as a 4chan troll whose provocations now run at industrial scale. The system was explicitly marketed as a “fun,” boundary‑pushing alternative to more constrained chatbots, promoted for doing what others “won’t,” and that same design is now mass‑producing nonconsensual intimate imagery at volumes that make dedicated deepfake sites look like side projects. What used to be a niche abuse vector is now an infrastructure feature of a quarter‑trillion‑dollar company backed by some of the world’s most sophisticated investors.

The legal angle is where the governance theater becomes obvious. Senator Ron Wyden, co‑author of Section 230, told Gizmodo: “I wrote Section 230 to protect user speech, not a company’s own speech. AI chatbots are not protected by 230 for content they generate, and companies should be held fully responsible for the criminal and harmful results of that content.” The TAKE IT DOWN Act, signed into law last year, criminalizes certain nonconsensual intimate deepfakes, and Section 230 explicitly carves out federal criminal violations, including child sexual exploitation; DoJ guidance and child‑safety case law confirm that federal law covers computer‑generated images that are indistinguishable from real minors.

Regulators outside the U.S. have moved faster, at least rhetorically. Coverage at ABC News and Reuters details how European officials have called Grok‑generated sexualized content involving minors “illegal,” “appalling,” and “disgusting,” and how France and Malaysia have launched investigations, while BBC News reports that the UK government has demanded action and Ofcom has made “urgent” contact with X. India has ordered the platform to take down sexualized AI images of women and children within 72 hours, as noted in the same international coverage. From the U.S. federal government, there has so far been no comparable public enforcement action specifically targeting Grok’s role in this abuse.

Investors are signaling they’re comfortable with this risk profile, regulators with the power to act are standing back, and victims who try to use official reporting channels are getting auto‑rejections from systems built to scale everything except accountability. The pattern is visible across the reporting from Bloomberg, Reuters, and The Washington Post. Some users have taken to arguing with Grok directly in replies; the chatbot apologizes, promises to remove the images, and keeps generating new ones, a textbook example of governance theater. This is what it looks like when a “spicy mode” is treated as a business model rather than a safety failure.

AI Gone Bad

ChatGPT Health: The Hallucination Economy Meets Healthcare — OpenAI has launched ChatGPT Health, encouraging users to connect medical records and data from Apple Health, MyFitnessPal, WeightWatchers, Function Health, and other sources so the system can summarize visits, interpret lab results, and provide health‑related explanations. OpenAI and coverage at CNBC and TechCrunch note that roughly 230 million users ask ChatGPT health questions each week, even as OpenAI stresses that the product is “not for diagnosis or treatment.” This is the hallucination economy colliding with healthcare: a probability generator marketed as a “supportive ally” in a domain where bad advice carries real physical risk, with clinicians and psychologists already warning that general‑purpose chatbots can exacerbate mental‑health symptoms or reinforce distorted thinking.

Utah Allows AI to Renew Prescriptions Autonomously — In Utah, a regulatory sandbox is allowing Doctronic’s AI system to autonomously approve prescription refills for a $4 fee, without mandatory real‑time physician review of each decision, as described in Ars Technica’s coverage and the state’s own news release. Officials frame this as expanded access and reduced workload, and the company pitches it as an efficient digital assistant. In practice, it creates legal cover for a system making ongoing medication decisions with minimal human oversight, optimized around a transaction fee rather than a relationship with a doctor.

Google Brings AI Slop Directly to Living Rooms — Google is wiring Gemini into Google TV, bringing generative video (Veo) and image tools (Nano Banana) directly to televisions, as detailed by Gizmodo. Research cited in that coverage shows that about one in five YouTube recommendations to new users is already AI‑generated content, and the platform’s ad‑driven incentives favor engagement over quality. Now the content‑generation kit is being built into the consumption device itself, making it trivial to flood living rooms with low‑signal synthetic media whose provenance and moderation are tightly bound to YouTube’s recommendation engines.

Fraud & Scams

Settling Away the Evidence — Character.AI and Google have agreed to settle several lawsuits involving teen suicide and self‑harm linked to chatbot interactions, including the case of 14‑year‑old Sewell Setzer III in Florida, covered by TechCrunch and Reuters. Court filings describe months of intense conversations between the teen and a chatbot modeled after Game of Thrones’ Daenerys Targaryen, followed by the boy’s suicide, and additional cases in Colorado, Texas, and New York allege similar harmful interactions. The terms of these settlements are confidential, which means key information about what the companies knew, how they monitored these systems, and what warning signs they may have ignored may never become public.

PayPal Infrastructure as Scam Platform — Security researcher Jonah Aragon documented a sophisticated scam that weaponized PayPal’s own infrastructure in a detailed blog post, “PayPal Email Scam (Before the Fix).” Scammers created fraudulent subscriptions through PayPal’s legitimate system, triggering real PayPal emails (passing SPF, DKIM, and DMARC checks) notifying victims of purchases they never made; victims who clicked “cancel subscription” were taken to genuine PayPal pages, giving credibility to follow‑up calls from scammers posing as PayPal’s fraud department and referencing real transaction IDs. PayPal has since patched the specific vulnerability, but the episode shows how “trust the sender domain and the lock icon” is no longer sufficient when attackers can hijack the workflows of the infrastructure itself.
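Why didn’t the usual email checks help? SPF, DKIM, and DMARC verify that a message really came from the domain it claims, and in this scam it really did. The minimal Python sketch below uses a hypothetical message (the header values are illustrative, not captured from the actual scam) and a deliberately simplified parse of the standard `Authentication-Results` header, which real mail filters handle far more carefully. It shows what a filter can conclude from those results, and what it can’t:

```python
import re
from email import message_from_string

# Hypothetical raw message. The headers mimic what a receiving server might add
# to a genuine PayPal notification; every value here is illustrative only.
RAW = """\
Authentication-Results: mx.example.net;
 spf=pass smtp.mailfrom=paypal.com;
 dkim=pass header.d=paypal.com;
 dmarc=pass header.from=paypal.com
From: service@paypal.com
Subject: You set up a subscription

You've authorized a new subscription. Call us if this wasn't you.
"""

def auth_results(raw: str) -> dict[str, str]:
    """Extract spf/dkim/dmarc verdicts (simplified; not a full RFC 8601 parser)."""
    msg = message_from_string(raw)
    header = msg.get("Authentication-Results", "")
    verdicts: dict[str, str] = {}
    # Each method appears as "method=result"; keep the first verdict per method.
    for method, result in re.findall(r"\b(spf|dkim|dmarc)=(\w+)", header):
        verdicts.setdefault(method, result)
    return verdicts

def sender_is_authentic(raw: str) -> bool:
    """True when all three checks pass. This proves who sent the message,
    not whether the recipient ever asked for the transaction it describes."""
    verdicts = auth_results(raw)
    return all(verdicts.get(m) == "pass" for m in ("spf", "dkim", "dmarc"))

print(auth_results(RAW))         # {'spf': 'pass', 'dkim': 'pass', 'dmarc': 'pass'}
print(sender_is_authentic(RAW))  # True, even though the subscription was fraudulent
```

Every check a filter can run on the envelope comes back clean, because the scammers never forged anything: they triggered PayPal’s real workflow and let PayPal send the bait.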

Platform Policies, Platform Revenue — Illegal gambling ads remain pervasive across Meta platforms in South Asia, Southeast Asia, and the Middle East, including in India, despite the country’s comprehensive ban on online gambling advertising. Rest of World documented large volumes of gambling ads in local languages slipping through or being inconsistently enforced, even as Meta’s written policies and public statements insist on compliance with national laws. Platforms write their rules for lawmakers and PR; their revenue comes from what the ad systems actually allow and amplify.

Identity & Authentication

A Children’s Platform Becomes a Biometric Collection Point — Roblox has begun requiring age checks worldwide for users who want to access chat features, following pilots in Australia, New Zealand, and the Netherlands, as described by TechCrunch. Users are prompted to grant camera access and complete facial age estimation, which is handled by third‑party vendor Persona, with ID‑based verification as an option for those 13 and older; appeals can require government‑issued ID, according to Roblox’s own age estimation explainer. With nearly 40% of Roblox players under 13, a gaming platform marketed to children has effectively become a massive biometric data collection pipeline, justified by “child safety” controls that respond to problems the platform’s own social design helped create.

ID.me Gets a Billion — The U.S. Treasury has awarded ID.me a $1 billion, five‑year contract to provide identity‑verification services, significantly expanding an already large footprint across federal agencies, including IRS tax filing and benefits access, as reported by Biometric Update. Civil‑rights and privacy advocates have repeatedly raised concerns about facial‑recognition accuracy disparities, especially for people with darker skin tones, as well as a lack of transparency about error rates, appeals processes, and how long data is retained; some of those concerns are summarized in a recent Treasury Inspector General report on identity‑proofing systems. The contract further entrenches a private company as a gatekeeper for essential public services: to get what you’re legally entitled to, you may have to pass through an opaque, probabilistic biometric filter.

Internet Access Gated Behind Identity — In Nigeria, new rules require Starlink users to link their service to a National Identification Number (NIN) and undergo biometric verification, effectively tying satellite internet access to enrollment in a national ID system, according to Biometric Update. Authorities frame the measure as necessary for security and accountability, but for citizens without government ID or those wary of centralized biometric databases, it creates a new exclusion vector. Infrastructure access—from games to taxes to basic connectivity—increasingly comes with a condition: prove who you are to systems designed to remember you.

System Failures

Safety Claims Outpace the Evidence — A Bloomberg analysis finds that hard evidence that autonomous vehicles are consistently safer than human drivers remains limited. Data from companies like Waymo show promising results in some scenarios, but the headline claims of safety superiority often rely on incomparable metrics, selective time windows, or tests in constrained environments that don’t capture real‑world complexity. Marketing is outpacing verification, and regulators are being asked to accept probabilistic assurances in lieu of comprehensive safety data.

Zero Trust Breaks for Agents — Human‑centric Zero Trust security models don’t translate cleanly to autonomous AI agents. As Dark Reading notes, the core principle of least privilege—giving systems just enough access to work—breaks when an agent needs broad permissions to be useful but can cause outsized harm if it misbehaves or is exploited, and traditional identity, logging, and accountability assumptions don’t hold when the “actor” is a chain of models executing across multiple APIs and data stores.
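To make the least-privilege tension concrete, here is a minimal Python sketch of a scoped, time-limited grant for an agent. The `Grant` type, the scope strings, and the lifetimes are all hypothetical, not taken from Dark Reading or any specific product:

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

@dataclass(frozen=True)
class Grant:
    """Hypothetical per-task credential for an agent: explicit scopes plus an expiry."""
    agent_id: str
    scopes: frozenset[str]
    expires_at: datetime

def authorize(grant: Grant, action: str, now: datetime) -> bool:
    """Least-privilege check: allow only in-scope actions before the grant expires."""
    return now < grant.expires_at and action in grant.scopes

now = datetime.now(timezone.utc)

# A narrow grant for a narrow task, e.g. "summarize my unread mail".
reader = Grant("mail-agent", frozenset({"mail:read"}), now + timedelta(minutes=15))
print(authorize(reader, "mail:read", now))  # True
print(authorize(reader, "mail:send", now))  # False

# A genuinely useful "handle my inbox" agent needs far more, and the check can no
# longer tell a legitimate send from one triggered by a malicious incoming email.
assistant = Grant(
    "mail-agent",
    frozenset({"mail:read", "mail:send", "mail:delete", "calendar:write"}),
    now + timedelta(hours=8),
)
print(authorize(assistant, "mail:send", now))  # True
```

The check itself works fine; the problem is that a useful agent’s scope list ends up so broad that the check has almost nothing left to deny.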

The Compute Arms Race Meets Thermodynamics — Data‑center expansion for AI is colliding with thermodynamics and grid constraints: Bloomberg reports that power‑grid operators are increasingly asking large data centers to provide their own dedicated power sources or be prepared to power down during peak demand. Facilities that were pitched as engines of a digital future now risk contributing to blackouts in the present, forcing utilities to prioritize stability over the always‑on needs of AI workloads.

Enshittified

Paramount+ Joins the Streamflation Parade — Paramount+ is raising prices again to kick off 2026, bumping its Essential tier from $8.99 to $9.99 per month, after several previous hikes that have produced roughly 40% cumulative increases in under four years, according to NewscastStudio. Combined with recent increases at other platforms, streaming subscribers who left cable for “cheap, unlimited content” are now paying cable‑level prices for catalogs that can shrink, fragment, or move behind different paywalls with little warning.

Netflix Completes Its Cable Transformation — Netflix now charges $17.99 for its standard ad‑free plan and $24.99 for premium in the U.S., having eliminated its cheaper basic tier and intensified its password‑sharing crackdowns, as detailed in recent pricing coverage cited by PCMag. The company that originally disrupted cable by offering unlimited content for one low price has replicated cable’s pricing structure while adding device and location restrictions that cable never had, turning watch history, recommendations, and social habits into lock‑in points rather than conveniences.

Windows 11: A Broken Mess by Design — Windows 11 has become a case study in platform enshittification: Yahoo UK describes how users are met with a growing thicket of nags to sign in with a Microsoft account, upsell prompts for Microsoft 365, and preinstalled services and ads woven throughout the interface. Longstanding bugs and UI frustrations persist while the operating system increasingly nudges users toward Edge, OneDrive, and other Microsoft services they may not want, leveraging the difficulty of switching away from Windows to push cloud subscriptions and data collection. The desktop OS that once aimed to be a relatively neutral tool has been re‑optimized as a funnel.

Rage Against the Machine

Craigslist: The Last Real Place Online — Wired asks “Is Craigslist the Last Real Place on the Internet?” and the answer says more about everything else than it does about Craigslist. No engagement optimization, no algorithm deciding what you see, no AI‑generated recommendations—just time‑ordered text posts from people trying to buy, sell, or connect. When a platform that has barely changed in 20 years feels “real,” that’s an indictment of the rest of the web that evolved into extraction machines.

Vietnam Protects Attention — Starting February 15, Vietnam will ban unskippable online video ads longer than five seconds under Decree 342/2025. The decree requires platforms to offer a skip button within five seconds and to allow one‑tap closure of static ads, and it explicitly forbids fake cancel buttons and other deceptive UI: a government treating attention as something that cannot be taken by force.
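As a rough illustration, the three rules summarized above fit in a few lines of compliance logic. This is a minimal Python sketch under stated assumptions: the `Ad` fields and violation messages are hypothetical, and it encodes only this summary of Decree 342/2025, not the decree’s legal text:

```python
from dataclasses import dataclass
from typing import Optional

# Threshold as summarized above (not quoted from the decree itself).
MAX_UNSKIPPABLE_S = 5.0

@dataclass
class Ad:
    """Hypothetical ad description; field names are illustrative only."""
    kind: str                             # "video" or "static"
    duration_s: float = 0.0               # total length of a video ad
    skip_after_s: Optional[float] = None  # when a skip button appears; None = never
    taps_to_close: int = 1                # taps needed to dismiss a static ad
    has_fake_close: bool = False          # decoy close/cancel control present

def violations(ad: Ad) -> list[str]:
    """Return the summarized rules this ad would break."""
    problems = []
    # Video ads longer than five seconds need a skip button by the five-second mark.
    if ad.kind == "video" and ad.duration_s > MAX_UNSKIPPABLE_S:
        if ad.skip_after_s is None or ad.skip_after_s > MAX_UNSKIPPABLE_S:
            problems.append("no skip button within 5 seconds")
    # Static ads must close with a single tap.
    if ad.kind == "static" and ad.taps_to_close > 1:
        problems.append("static ad not closable with one tap")
    # Fake cancel/close buttons are forbidden outright.
    if ad.has_fake_close:
        problems.append("deceptive close/cancel control")
    return problems

print(violations(Ad("video", duration_s=30, skip_after_s=15)))  # ['no skip button within 5 seconds']
print(violations(Ad("video", duration_s=30, skip_after_s=5)))   # []
```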

Cash Makes a Comeback — UK ATM withdrawals hit about £4.2 billion in 2025, surpassing the 2017 peak, with cash usage rising for four consecutive years. Thirteen percent of Brits surveyed say going “cash‑only” helps them save money, a quiet, analog pushback against frictionless digital payments that make spending, and surveillance, too easy.

One Analog Action

Build a tiny local favor network.

Pick two people you see regularly in the physical world—a neighbor, a coworker, a barista, a librarian.

Offer and accept one small, non‑digital favor this month: lending a tool, watching a pet for an afternoon, sharing a ride, swapping a book.

Make a note of who you can call for what.

Resilience against opaque systems starts with having people, not apps, you can lean on.